Summary

Earlier this year, Apple released the 2020 iPad Pro with a special feature: a built-in LiDAR sensor. Beyond its wide-ranging benefits for mobile photography, the new sensor was meant to take augmented reality (AR) to the next level. Features like dynamic occlusion and surface scanning promised a new degree of interactivity for AR applications.

Shortly after the release, vGIS conducted an evaluation to determine whether the new LiDAR sensor improved spatial tracking. As a leading developer of augmented reality and mixed reality (MR) solutions for BIM, GIS, and Reality Capture, vGIS focused the evaluation on its main use case: displaying digital twin data for maintenance workers, engineers, and construction crews.

At that time, we found no significant improvement in the iPad’s spatial tracking compared with other devices, including previous generations of the iPhone. Over time, though, we noticed a few areas where the LiDAR-equipped iPad seemed to work slightly better than we had thought, and the release of the iPhone 12 Pro gave us the incentive to perform a second test. We reformulated our hypothesis and re-evaluated the system using a new methodology.

Methodology

Our goal was to test the LiDAR-equipped iPhone 12 Pro and to retest the LiDAR-equipped 2020 iPad to determine whether built-in LiDAR improves features relevant to the vGIS app.

Unlike the first test, which was limited to a long walk along a single closed-loop course, the second test also included other checks, such as nighttime performance and distance-tracking accuracy.

As in the original test, we set up a closed-loop course of about 200 meters (650 feet) with sharp turns. We also prepared a straight-line track exactly 50 meters (164 feet) long for both one-way and round-trip accuracy testing. We measured the distances with a Leica FLX100 high-accuracy GNSS receiver.

We tested the iPhone 12 Pro with LiDAR; the 2020 iPad with LiDAR; the Samsung Galaxy Note10+ with a ToF sensor; and the Google Pixel 4XL, which relies entirely on optical tracking with a single camera. To ensure consistency of tracking, the devices were affixed to the same mount. We alternated their position on the mount to make sure that their relative positions did not affect the results.

We ran the tests in the vGIS system without an external GNSS receiver, which forced the devices to rely entirely on their AR tracking algorithms.

The test covered the following aspects:

- Surface-scanning accuracy

- Straight-line distance tracking

- Closed-loop distance tracking

- Error-correction capabilities

- Consistency in different light conditions

- Quality of the AR experience

At the end of each test, we recorded each device’s performance as its deviation from a waypoint or from the expected result. Unless otherwise noted, the tests were performed in normal (overcast) light conditions.

Optical tracking vs LiDAR vs ToF

Our test devices covered the three tracking technologies currently available in consumer devices:

- Optical tracking

- LiDAR

- Time of Flight (ToF) sensor

Before looking at the results, let’s review what each technology represents.

Modern AR-enabled devices use real-time video-stream analysis to track the position of the device in space. By analyzing visual elements appearing in video frames, sophisticated algorithms estimate the shapes of the objects that enter the view of the camera. Sometimes, optical tracking is supported by other internal sensors, like a gyroscope or an accelerometer.
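Inertial sensors help, but they cannot track position on their own for long. The following is a minimal sketch, assuming a stationary device, a single axis, and a hypothetical constant accelerometer bias (real frameworks fuse inertial and visual data in far more sophisticated ways). It shows how integrating acceleration twice turns even a tiny bias into a position error that grows with the square of time:

```python
def dead_reckoning_drift(bias_mps2: float, duration_s: float, dt_s: float = 0.01) -> float:
    """Return the spurious displacement (m) accumulated by integrating a
    constant accelerometer bias twice, for a device that is actually still.

    Single axis, fixed time step, semi-implicit Euler integration.
    """
    velocity = 0.0  # m/s, estimated from acceleration
    position = 0.0  # m, estimated from velocity
    steps = round(duration_s / dt_s)
    for _ in range(steps):
        # True acceleration is zero, so the sensor reports only its bias.
        velocity += bias_mps2 * dt_s
        position += velocity * dt_s
    return position


if __name__ == "__main__":
    # Hypothetical bias of 0.01 m/s^2; real sensor biases vary widely.
    for seconds in (1, 10, 60):
        print(f"{seconds:>3} s: {dead_reckoning_drift(0.01, seconds):.2f} m")
```

With that hypothetical bias, the error is roughly 0.5 * b * t^2: about 0.005 m after 1 second, 0.5 m after 10 seconds, and 18 m after 60 seconds. This is one reason inertial data is better suited to bridging short gaps between reliable optical fixes than to tracking on its own.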

In our tests, the Google Pixel 4XL represented the kind of device that relies solely on optical tracking. The 2020 iPad and the iPhone 12 Pro each have optical tracking capabilities as well as built-in LiDAR. The Samsung Galaxy Note10+ has the same capabilities as the Google Pixel 4XL, as well as a ToF sensor.

LiDAR and ToF sensors rely on the same mechanism to measure the distances from the device to surrounding objects. Using infrared lasers, they measure the time it takes for light to travel from the device to the target surface and back, information that is used to calculate the distance to that point on the surface of the object. Knowing the distances to multiple points on the surrounding surfaces enables the device to create 3D point clouds of the environment around the user.
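The ranging principle above reduces to distance = (speed of light × round-trip time) / 2. The sketch below assumes idealized direct time-of-flight readings and a known beam direction for each sample; it ignores noise and the phase-based measurement schemes some sensors use, and the function names and coordinate convention are our own rather than any device API. It shows how individual range readings become a 3D point cloud:

```python
import math

SPEED_OF_LIGHT_MPS = 299_792_458.0  # exact by definition of the meter


def distance_from_round_trip(round_trip_s: float) -> float:
    """Light travels to the surface and back, so the one-way distance is half the path."""
    return SPEED_OF_LIGHT_MPS * round_trip_s / 2.0


def to_point(distance_m: float, azimuth_rad: float, elevation_rad: float) -> tuple[float, float, float]:
    """Place one range reading along its beam direction.

    Sensor frame (an assumption for this sketch): x to the right, y up, z forward.
    Azimuth is measured from z toward x; elevation is measured up from the x-z plane.
    """
    horizontal = distance_m * math.cos(elevation_rad)
    return (
        horizontal * math.sin(azimuth_rad),
        distance_m * math.sin(elevation_rad),
        horizontal * math.cos(azimuth_rad),
    )


def point_cloud(samples):
    """samples: iterable of (round_trip_s, azimuth_rad, elevation_rad) tuples."""
    return [to_point(distance_from_round_trip(t), az, el) for t, az, el in samples]
```

For example, a 20-nanosecond round trip corresponds to a surface about 3 meters (2.998 m) away. The same arithmetic shows how fine the timing must be: a 1-nanosecond error shifts the estimate by about 15 centimeters.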

Although the basic operating principle is the same, LiDAR and ToF capture 3D information differently.

Apple uses a solid-state LiDAR that creates a fine grid of points, with the distance to each point measured individually. As the device is shaken or moved, LiDAR scans different points on the surrounding surfaces, creating a point cloud or 3D imprint of the surrounding objects.

Unlike the point-by-point mapping of LiDAR, ToF emits a pulse of light to illuminate a larger area all at once, collecting information that bounces off multiple surfaces within the illuminated area.

Infrared recording of the devices we tested: the iPad and iPhone LiDAR sensors emit point grids, the Note10+ ToF sensor flashes scattered light, and the Pixel uses only its cameras.

The advantage of the LiDAR approach is its superior ability to remove noise generated by the reflected light. When light is reflected back to the sensor, the sensor receives not only a direct reflection from the intended point but also many indirect reflections, off-angle reflections, and reflections caused by the various surface materials and complex surfaces in the environment. Although both LiDAR and ToF sensors clean incoming data to minimize the impact of noise, the LiDAR’s process of mapping the area point by point is better at filtering out faulty readings than a single flash of ToF that illuminates a much larger area at once.

Results

Surface Scanning

Surface scanning is a key background process in vGIS: it enables critical functionality and improves the accuracy of AR visuals. In everyday use, the Note10+ seemed to find surfaces that the other phones could not, so it was time for a more systematic test to determine whether this was really so.

We reviewed how well devices performed in determining the elevations of various surfaces:

- Glossy hardwood.

- Nearby surfaces (e.g., a tabletop about 30 centimeters or one foot from the device).

- A nonreflective floor with distinct features.

- A complex grass surface.

- Concrete with distinct features.

- Even pavement without distinct anomalies.

For each device and surface type, we captured the number of successful detection attempts, relative accuracy, and the number of false positives.

| Surface | 2020 iPad Pro | iPhone 12 Pro | Galaxy Note10+ | Pixel 4XL |
|---|---|---|---|---|
| Glossy hardwood | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: low; false positives: 60% | Detection: ~90%; accuracy: mid; false positives: 0% |
| Nearby surfaces | Detection: 0%; accuracy: low; false positives: 100% | Detection: 0%; accuracy: low; false positives: 100% | Detection: 100%; accuracy: high; false positives: 0% | Detection: ~10%; accuracy: mid; false positives: 0% |
| Nonreflective floor | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: ~70%; accuracy: mid; false positives: 0% |
| Grass | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: ~80%; accuracy: mid; false positives: 0% |
| Concrete | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: ~80%; accuracy: mid; false positives: 0% |
| Even pavement | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: 100%; accuracy: high; false positives: 0% | Detection: ~70%; accuracy: mid; false positives: 0% |

The iPad and the iPhone performed almost identically in all conditions. Surprisingly, these LiDAR devices could not identify the desk surface, consistently placing it almost a meter farther away than it actually was. The results suggest that effective LiDAR scanning requires a greater minimum distance than the other technologies do. We suspect this is related to how the LiDAR grid points are converted into surfaces.

In most environments, the ToF-equipped Galaxy Note10+ performed as well as or better than the iOS devices. It outperformed the iPhone and the iPad at identifying the desk surface, achieving a 100% detection rate with high accuracy and no false positives. On the other hand, it struggled with glossy hardwood, producing incorrect elevation measurements in almost 60 percent of our tests. The pattern of hits and misses suggests that detection was impaired when the surface reflected external light. In soft, evenly lit environments, the Note10+ performed well.

Of all the devices we tested, the Pixel 4XL did the worst job of detecting surfaces. It struggled with nearby surfaces, detecting surfaces just 30 centimeters away only about 10 percent of the time. When it did detect a surface, the Pixel 4XL measured it reasonably well, deviating from its LiDAR and ToF competitors by only a small margin. However, it could detect a surface only if the camera was moving relative to that surface. When the camera was stationary, it consistently failed to detect surfaces; as soon as some shaking or movement was introduced, the detection algorithms kicked into gear and the device measured the distances correctly.

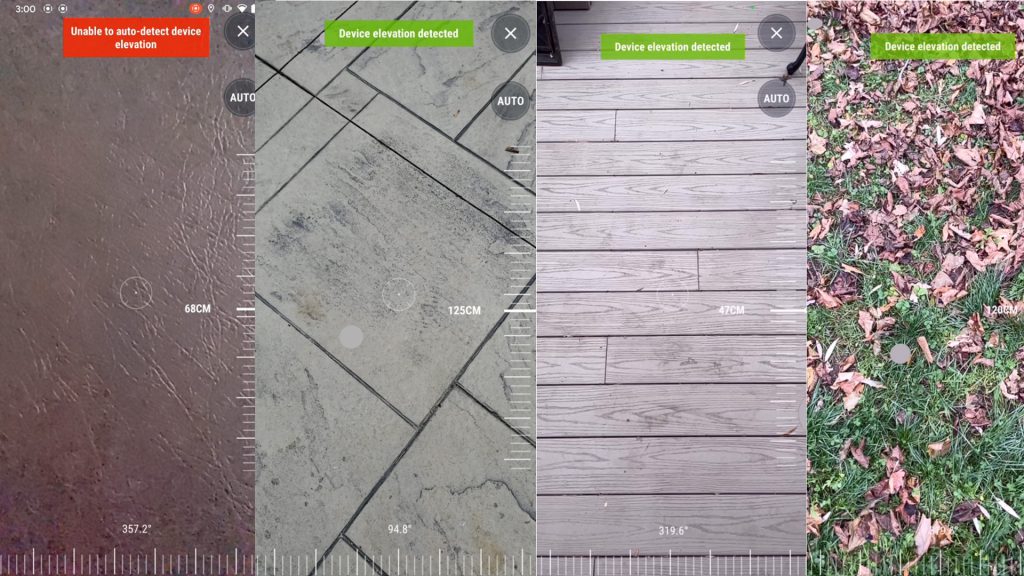

Surface detection tests

In the distance-tracking tests, the Pixel often misjudged its height relative to the road surface, causing visuals to appear higher or lower than they should. This happened so often that we ended up disabling the Pixel’s surface scanning during the distance tests, because the misalignment interfered with our ability to conduct them.

Winners: the iPhone and the iPad tying for first, with the Note10+ a close second.

Straight-line distance tracking (50m)

| Metric | 2020 iPad Pro | iPhone 12 Pro | Galaxy Note10+ | Pixel 4XL |
|---|---|---|---|---|
| Accuracy | 1.8m | 1.9m | 0.7m | 1.2m |
| Precision | 1.2m | 1.2m | 1.1m | 1.9m |

Smaller is better

Accuracy and precision

In this test, the Note10+ ranked first in both accuracy and precision. Its results fell consistently closer to the 50-meter mark, giving it an indisputable lead in accuracy, and they formed a tighter cluster around the finish point, giving it first place in precision as well.

The iPad and the iPhone consistently underestimated the distance. That consistency placed both devices second to the Note10+ in precision, but their systematic shortfall put them behind all other devices in accuracy.

Finally, the Pixel came in second in the accuracy tests. But the spread of its finish positions was much wider than that of the other devices, so that it scored dead last in precision.

Winner: Note10+
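The tables report accuracy and precision as distances but do not define how they were derived from the finish positions. One common convention, shown here as an assumption rather than as the exact formula behind the tables, treats accuracy as the distance between the average finish point and the target, and precision as the average distance of individual finishes from that average point. Under this convention, smaller is better for both:

```python
import math
from statistics import fmean

Point = tuple[float, float]  # (east_m, north_m) relative to a local origin


def centroid(points: list[Point]) -> Point:
    """Average finish position."""
    return (fmean(p[0] for p in points), fmean(p[1] for p in points))


def accuracy(finishes: list[Point], target: Point) -> float:
    """Systematic error: how far the average finish lands from the target."""
    return math.dist(centroid(finishes), target)


def precision(finishes: list[Point]) -> float:
    """Spread: mean distance of the individual finishes from their own centroid."""
    c = centroid(finishes)
    return fmean(math.dist(p, c) for p in finishes)
```

With hypothetical finishes at 48.0, 48.5, and 47.5 meters along a 50-meter track, the accuracy is 2.0 m while the precision is about 0.33 m. Under this convention, a device that consistently underestimates the distance, as the iPad and iPhone did, can score well on precision and poorly on accuracy at the same time.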

Closed-loop distance tracking

The closed-loop test had two tracks:

- A round trip from the straight-line test (there and back) with a total distance of 100 meters (328 feet).

- A closed-loop course of about 200 meters.

The shorter track was easier for the devices to navigate because it covered the same straight segment twice, once in each direction. Because that segment was only 50 meters (164 feet) long, the devices could remember the scanned surfaces and compensate for the drift accumulated during the walk.

| Metric | 2020 iPad Pro | iPhone 12 Pro | Galaxy Note10+ | Pixel 4XL |
|---|---|---|---|---|
| Accuracy | 0.9m | 0.9m | 0.8m | 1.5m |
| Precision | 0.8m | 0.8m | 1.4m | 6.4m |

Smaller is better

The results were broadly similar to those of the straight-line test: the Note10+ finished first in accuracy and second in precision; the iPad and the iPhone were a very close second in accuracy and the best in precision; and the Pixel was last in both categories. Surprisingly, the round trip proved to be a greater challenge for the Pixel: its finish points were scattered over a large area, on one occasion 4.6 meters away from the expected destination.

The longer closed-loop track was more challenging for all of the devices than the straight-line track. The layout of the course (sharp turns, stretches of pavement without noticeable marks, and so forth) pushed the tracking abilities of each device to their limits.

| Metric | 2020 iPad Pro | iPhone 12 Pro | Galaxy Note10+ | Pixel 4XL |
|---|---|---|---|---|
| Accuracy | 2.1m | 2.2m | 1.9m | 2.3m |
| Precision | 2.8m | 2.7m | 3.1m | 9.7m |

Smaller is better

The device rankings on the longer course were the same as on the shorter course, but the accumulated drift was significantly greater, and the Pixel’s tracking became much more erratic. In one run, the Pixel deviated 19 meters over the 200-meter walk.

Winner: Note10+

Drift-correction capabilities

While testing the accuracy and precision of movement tracking, we also checked whether the devices could recognize previously visited places and use that information to correct drift. Each device’s error-correction capability depended mostly on its ability to retain key information about the test area. There is a trade-off here: retaining more information potentially enables better drift correction, while freeing up memory helps maintain performance. Each framework (ARKit from Apple and ARCore from Google) strikes this balance differently, as the results show. This aspect relates not to the scanning ability of each technology but to how each framework records, retains, and releases information about the device’s surroundings.

| Metric | 2020 iPad Pro | iPhone 12 Pro | Galaxy Note10+ | Pixel 4XL |
|---|---|---|---|---|
| Drift correction rate | <20% | <20% | >95% | >80% |

Both the Note10+ and the Pixel did a respectable job of identifying the starting point at the end of both the short and the long track, although the Pixel usually took two to five seconds longer to correct for drift. In two instances, the Pixel falsely recognized the starting point, suddenly moving the visuals to a random location.

In the same tests, the iPad and the iPhone rarely applied drift correction. The results suggest that Apple prioritizes a fluid AR experience over other aspects and that it targets smaller indoor settings as its main use case.

Winner: Note10+
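Once a device recognizes its starting point, the gap between where it believes it is and where the loop began is a direct measure of accumulated drift. The sketch below is a simplified illustration, not how ARKit or ARCore work internally: it spreads that misclosure back along a 2D trajectory in proportion to the distance walked, similar in spirit to the compass (Bowditch) rule used to adjust closed survey traverses. It also shows why retention matters: the correction is possible only if the device still remembers the starting point.

```python
import math

Point = tuple[float, float]  # (x_m, y_m) in the device's tracking frame


def close_loop(track: list[Point]) -> list[Point]:
    """Correct a closed-loop trajectory whose last point should coincide
    with its first point (the device has recognized the starting position).

    The misclosure (last point minus first point) is treated as accumulated
    drift and removed in proportion to the distance walked up to each point.
    """
    if len(track) < 2:
        return list(track)
    # Cumulative path length at each recorded position.
    cumulative = [0.0]
    for a, b in zip(track, track[1:]):
        cumulative.append(cumulative[-1] + math.dist(a, b))
    total = cumulative[-1]
    if total == 0.0:
        return list(track)
    err_x = track[-1][0] - track[0][0]
    err_y = track[-1][1] - track[0][1]
    return [
        (x - err_x * s / total, y - err_y * s / total)
        for (x, y), s in zip(track, cumulative)
    ]
```

For a hypothetical square walk that ends at (1, 2) instead of back at (0, 0), `close_loop` leaves the first point untouched, moves the last point exactly onto the start, and shifts intermediate points by a share of the error proportional to how far along the loop they were recorded. A false recognition of the starting point, like the two the Pixel made, would apply the same correction with the wrong reference and drag the visuals off course.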

Reliability in low-light conditions

Even before the formal tests, we had noticed a difference in how iOS and Android perform in difficult conditions. Because they rely mostly or entirely on optical tracking, Android devices begin to lose focus in dim or challenging light. As soon as the device loses focus, is pointed at an evenly painted surface, or finds itself in an environment that is too dark, Android-based AR visuals start to float away, eventually sticking to the screen. Android-based AR simply doesn’t work in dark or challenging environments.

Apple devices, on the other hand, do a very good job of tracking their movements even in complete darkness. iPhones and iPads (even models without LiDAR) managed to track movements with minimal drift over short distances, that is, distances under 20 meters (65 feet).

The video below shows the performance of these devices in complete darkness. When the devices are waved from side to side, it is clear that the iPhone and the iPad continuously track movements, whereas the Android device simply abandons any attempts at tracking.

Android’s performance in such conditions is disappointing, to say the least, considering that iOS achieves the same result on older, non-LiDAR phones, including the iPhone X and the iPhone 11. The likely explanation is that Android uses the video feed as its primary source of information about the device’s movements; when there is no reliable video feed, the device simply disables tracking. Android’s inability to track its surroundings once it can no longer identify surface features is a problem even in less demanding indoor applications.

Winner: iPhone and iPad

Quality of the AR experience

The side-by-side comparison once again let us review the quality of the AR experience. Outdoor use of the vGIS application goes beyond what each AR framework was designed to address, pushing each device to its limits in a way that helps reveal the design choices behind each framework.

The iPad and the iPhone provided a noticeably smoother AR experience. The AR view on the iOS devices seemed more fluid and responsive. Indeed, AR on iOS performed almost as smoothly as the device’s native camera app. We were impressed by the consistent fluidity of the user experience.

Viewed in isolation, Pixel-based AR seems to perform almost as well. But in a side-by-side comparison, its occasional freezes and jumps become noticeable.

In contrast, the Note10+ consistently felt as if it were struggling to keep up with AR processing. At the starting point, all of the devices performed almost identically. However, as time passed or distance increased, the Note10+ visuals rapidly became grainy, and frames began to freeze and skip.

Winner: iPhone and iPad

Final Thoughts

This second test confirmed the similarities and differences in the approaches taken by Apple, Google, and the OEMs that use ARCore. Although we made most of our observations informally, before conducting formal comparisons, the comprehensive side-by-side tests offered the clearest view yet of the pros and cons of each framework and device.

Indisputably, devices with ToF or LiDAR (the Note10+, the iPhone 12 Pro, and the 2020 iPad Pro) have superior surface-detection capabilities. The Pixel 4XL did an admirable job finding surfaces by using only the optical processing of a single camera. But the Pixel 4XL couldn’t keep up with the other devices, offering substandard results on a frustratingly regular basis.

Although it outperformed the Pixel 4XL, the ToF sensor of the Note10+ couldn’t match the LiDAR of the iPhone and iPad in the quality, accuracy, and consistency of surface detection. The ToF sensor suffered an occasional hiccup, whereas the LiDAR was rock-solid and blazingly fast at detecting and tracking its surroundings.

The surprising discovery was the unrefined AR experience of the Note10+. Despite having a nearly current top-of-the-line CPU and the most RAM of any device we tested, it produced a grainy AR experience that was further marred by constant freezing and frame skipping.

Although the Pixel 4XL performed moderately well, it suffered from its lack of supporting sensors. The result was inconsistent surface scanning, with false positives and false negatives that regularly caused the visuals to jump into the air or sink below the road surface, as well as less accurate and less precise spatial tracking.

Without a doubt, the iPhone 12 Pro and the 2020 iPad offered superior AR performance. We were constantly amazed by how well both devices performed in a variety of conditions, including dim light, outshining their Android competitors in the quality of surface tracking and the overall sophistication of the AR experience. Sure, iOS didn’t retain memories of its surroundings for very long, and it rarely corrected accumulated drift. But in all other respects, the system comes close to putting Android at a competitive disadvantage. Were it not for the crippling limitations of the Apple ecosystem and the difficulties of using and developing applications for iOS, we would consider adopting an iOS-only approach for vGIS mobile. Until those aspects of the iOS experience improve, and notwithstanding the shortcomings of Android’s performance, Android remains our preferred mobile platform.

Despite the praiseworthy performance of the LiDAR devices, we couldn’t shake the feeling that Apple had missed an opportunity here. Of all the systems we have tested, the Microsoft HoloLens provided the best approach to AR. By creating and maintaining a 3D model of the physical space, HoloLens demonstrated phenomenal stability and tracking. Although there had been no hints of such a development from Apple before the test, we had hoped that Apple would follow Microsoft’s lead and release something similar for its LiDAR devices. Apple hasn’t done so yet; we hope it will one day. We also hope that Google will enhance its framework to keep up with Apple’s advances.